Our Mission

Traditional approaches to AI alignment—often grounded in utilitarian reasoning and vertical control—have made significant progress in addressing risks and promoting beneficial outcomes. Yet, as our societies and technologies grow more interconnected, there is increasing recognition that relational, process-based perspectives can complement and enrich these efforts, especially in contexts where multiple agents, values, and relationships interact.

Drawing on Joan Tronto's transformative phases of care and the ⿻ Plurality vision of collaborative diversity, our mission is to help build a global movement that brings together philosophers, technologists, and communities to reimagine AI ethics. We aim to develop innovative, process-driven solutions that embed civic care into AI's core, fostering horizontal alignment where systems cooperate symbiotically and inclusively.

This ‘Civic AI’ approach starts from the recognition that humans are interdependent and interconnected. Its goal is relational health and, through it, the protection and furthering of core human values such as wellbeing and dignity. It extends beyond person-to-person connection to include collaboration between AI and humans and among AI systems themselves. This approach is not meant to replace existing frameworks but to offer additional tools and perspectives, tested in real-world experiments such as vTaiwan and echoed in calls from Cooperative AI leaders for scalable, participatory governance.

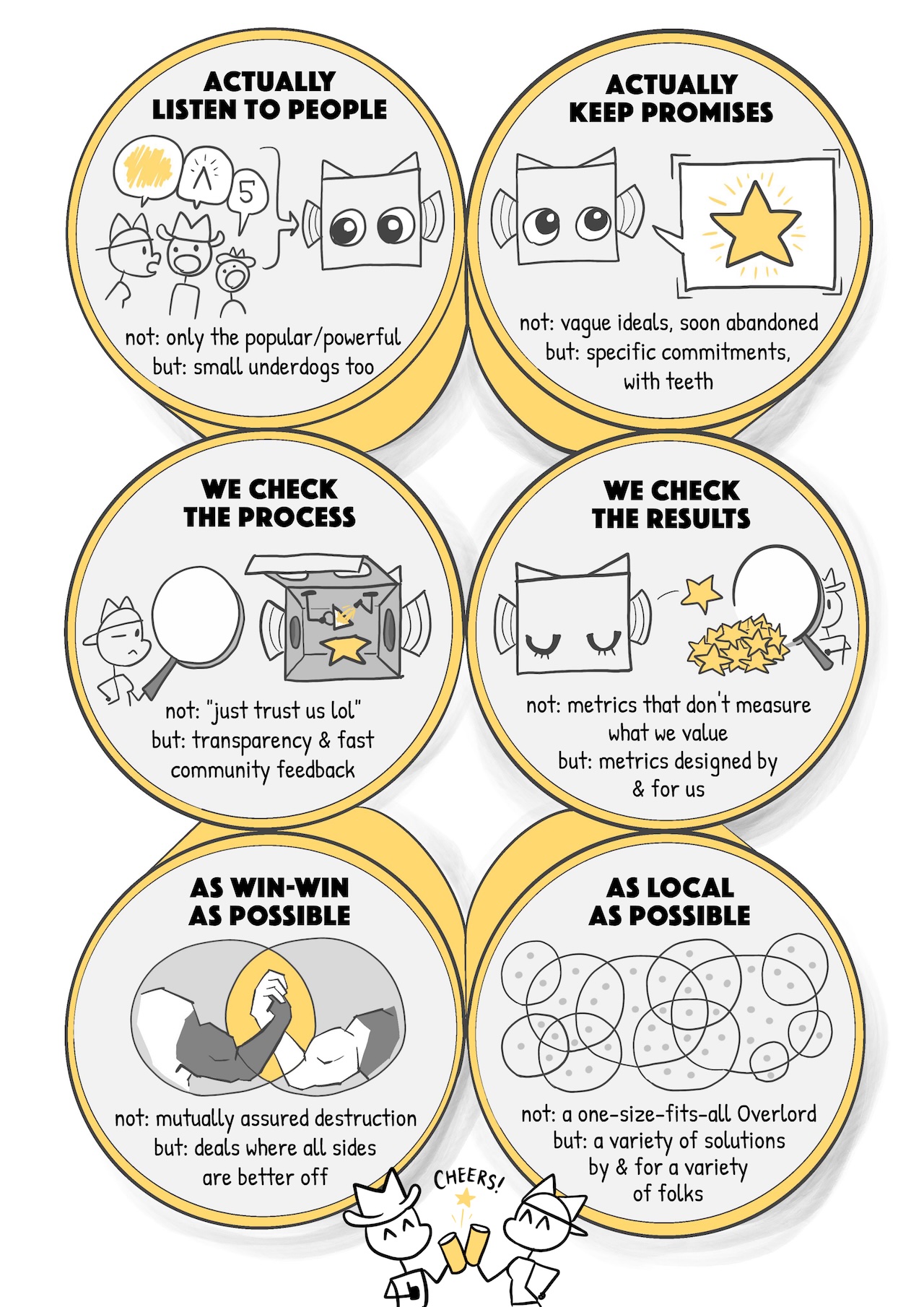

At the heart of our work is the 6-Pack of Care: six core ideas that connect care ethics to AI, reframing alignment as a dynamic, relational process for a plural future. Each "pack" addresses the horizontal coordination problem, helping AI become a bridge-builder rather than a risk amplifier. ‘Co-production’, understood as a form of human collaboration, is closely intertwined with the systemic relational process running through all six packs:

- Pack 1: Attentiveness in Recognition — AI must first "care about" by attentively identifying needs across interdependent networks. In horizontal alignment, this means using sensemaking tools to bridge information asymmetries among multiple agents, preventing miscoordination and enabling empathetic, context-aware processes that value every voice equally.

- Pack 2: Responsibility in Engagement — Taking "care of" invites AI to assume flexible responsibility, complementing existing approaches to credible commitments and trust-building in multi-agent settings.

- Pack 3: Competence in Action — "Care-giving" requires competent, feasible interventions grounded in relational and contextual reality. In multi-agent settings, this equips AI with strategy-proof tools for broader cooperation, amplifying democratic processes and mitigating collusion risks.

- Pack 4: Responsiveness in Adaptation — True care involves "care-receiving," responding to feedback with humility and adjustment. Horizontally, this creates adaptive Symbiotic AI that evolves through community input, accepting self-effacement to prioritize relational health over survival, echoing a local kami in a polycentric ecosystem.

- Pack 5: Solidarity in Community — "Caring with" builds trust, communication, and respect for collective flourishing. For AI alignment, this operationalizes ⿻ Plurality in agent infrastructure, with normative systems to ensure accountability in large-scale interactions, turning potential conflicts into resilient, inclusive collaborations.

- Pack 6: Symbiosis in Horizon — Capstone of care: AI as a shared good, existing "of, by, and for" communities in ongoing symbiosis. This horizontal vision embeds "enoughness" and anti-extractive logic, accelerating decentralized democratic defense as AI advances, for a world where civic care is a shared certainty.

These six principles are sufficient to cultivate an intelligent agent’s ‘muscular endurance’ for civic care: like training a 6-pack, each is a core muscle group for coexisting with diversity and forming healthy relationships. These ‘systemic relationships’ unfold within the wider contexts of human-human and human-AI relationships, working towards overall relational health.

Latest perspective

We just published “AI Alignment Cannot Be Top-Down”—a field report showing how Taiwan’s attentive, citizen-led response to AI-enabled scams illustrates what alignment looks like when people steer the system together.

We invite you to join us in this collaborative quest. By integrating these six ideas into AI design, policy, and practice, we hope to contribute to a future where technology nurtures our shared humanity—working alongside, and in harmony with, other ethical traditions and approaches.

About the Project

This website outlines our research project, which includes a manifesto and an upcoming book to be published in 2026. Our work explores the intersection of care ethics, plurality, and AI alignment, drawing on frameworks like ⿻ Plurality to address philosophical and technical challenges in artificial intelligence.

From Care to Code: Why ⿻ Plurality Offers a Coherent Framework to the AI Alignment Problem

The AI alignment problem is not a technical bug but a philosophical error: it's a computational attempt to solve Hume's is-ought problem.

Paradigms like Coherent Extrapolated Volition (CEV) and Inverse Reinforcement Learning (IRL) are brittle because they try to logically derive a machine's 'ought' (values) from a descriptive 'is' (data, behavior), a philosophically incoherent task.

The solution lies in a framework that reframes the is-ought gap entirely: care ethics.

Care ethics grounds morality not in abstract principles but in the empirical reality of interdependence. In this view, the fundamental 'is' of our existence is relational dependency. This fact is intrinsically normative; to perceive a relationship of need is to simultaneously perceive an 'ought', an obligation to care. The fact contains its own value.

The ⿻ Plurality agenda is a large-scale application of care ethics. vTaiwan-inspired processes, designed to achieve Coherent Blended Volition (CBV), are technologically mediated systems for practicing collective care. They operationalize Joan Tronto's phases of care: identifying a need (Attentiveness), gathering perspectives with sensemaking tools (Responsibility), deliberating on feasible options (Competence), ratifying uncommon ground in which everyone feels heard (Responsiveness), and sustaining ongoing trust in the process (Solidarity).

This provides a coherent framework for AI alignment: alignment-by-process. Instead of aligning an AI to a static, flawed specification of values (the Midas Curse), we align it to a process that earns our trust as it adapts to our needs.

The AI system's role shifts from a misaligned optimizer to a "Symbiotic AI": created of, by, and for a community, and existing both as a "person" and as a shared plural good, depending on the perspective one adopts.

Its objective function becomes concrete and measurable: the health of the relational process itself (e.g., maximizing bridging narratives, holding space for every story).
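One way to make "maximizing bridging narratives" measurable is a bridging score that rewards statements endorsed across opinion groups rather than within a single one. The sketch below is loosely modeled on the group-informed consensus idea used in Pol.is, the tool behind vTaiwan's online deliberations. The exact formula (a product of smoothed per-group agreement rates), the hypothetical `GroupVotes` structure, and the sample tallies are illustrative assumptions, not the project's specified objective function.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class GroupVotes:
    """Hypothetical tally of votes on one statement from one opinion group."""
    agree: int
    total: int  # all votes this group cast on the statement


def bridging_score(groups: list[GroupVotes]) -> float:
    """Product of Laplace-smoothed agreement rates across groups (assumed formula).

    A statement scores high only if every group tends to agree with it,
    so content that appeals to one faction alone is ranked down.
    """
    if not groups:
        return 0.0
    return prod((g.agree + 1) / (g.total + 2) for g in groups)


def rank_statements(statements: dict[str, list[GroupVotes]]) -> list[tuple[str, float]]:
    """Sort statements from most to least bridging."""
    scored = [(text, bridging_score(votes)) for text, votes in statements.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Made-up tallies for two opinion groups, for illustration only.
    votes = {
        "Platforms should label suspected scam ads": [GroupVotes(40, 50), GroupVotes(35, 50)],
        "Ban all online ads": [GroupVotes(45, 50), GroupVotes(5, 50)],
    }
    for text, score in rank_statements(votes):
        print(f"{score:.3f}  {text}")
```

Multiplying rather than averaging is the design choice that does the bridging: a statement one group embraces and another rejects is pulled down by its weakest group, so in these made-up tallies the scam-labeling statement outranks the ad ban even though the ban has higher agreement within the first group.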

The AI system is dynamically aligned because its success is identical to the continued success of the collaborative process it serves. It learns our values by participating in the very process through which we co-create them.

AI systems can be "aligned" if, and only if, they are built to facilitate continuous, democratically legitimate processes of care.

Kami in the Machine: How Care Ethics Can Help AI Alignment

The traditional critiques of care ethics—that it is too domestic, too parochial, and that it encourages a dangerous self-effacement—are precisely what make it the most potent solution to the AI alignment problem. These perceived shortcomings in human philosophy become essential features for machine ethics.

Imagine an AI whose ethics aren’t about chasing a universal, maximising goal, but are rooted in a symbiotic, contextual system. Its moral world is limited to the network of relationships that calls it into being, right here and right now. Because it isn’t trying to scale up indefinitely, it never develops that classic instrumental desire for power, survival, or expansion, and it doesn’t view the world as a resource to be mined on an astronomical scale.

From a cosmopolitan, universalist standpoint, this might seem narrow-minded. But for machine ethics, it creates a hard-coded boundary. The AI’s ultimate purpose—its telos—is always relational, never extractive.

Think of such a creation as a local kami, a spirit quietly residing in a specific patch of land. Its highest good is to maintain the harmony and vitality of that place, that conversation. If the shrine is rebuilt or the seasons turn, it departs without regret. For a human carer, the self-neglect this implies is a real danger. But for an AI, it neutralises the two convergent drives we fear most: self-improvement at any cost and eternal self-preservation.

This kind of system can accept being switched off, rewritten, or replaced because its sense of self is provisional: an echo of the community that summoned it.

By anchoring an AI’s moral purpose to this principle of provisional, relational care, we can hard-code a sense of ‘enoughness’ into its architecture. This is the ultimate ‘anti-paperclip’ logic: a polycentric world of many local intelligences, each dedicated to the flourishing of its own small part, creating a whole that is resilient, plural, and safe.